- Random findings can be misidentified as significant.

- Methodological problems can be overlooked by reviewers.

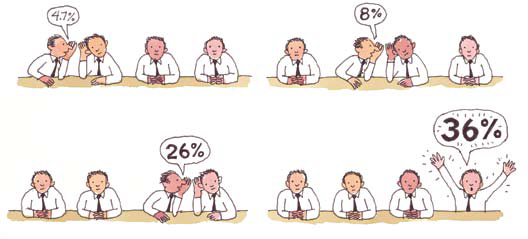

- And popular reporting can misrepresent or even exaggerate the original findings.

- These dangers are greatest when the finding is suggestive and the study lacks the statistical power to resolve the correlations in the data.[1]
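
The underpowered-study problem can be made concrete with a small simulation. This is a minimal sketch under stated assumptions: each replication's estimate is drawn from a normal distribution centred on a small true effect with a known standard error, and "significant" means |estimate / standard error| > 1.96. The function name `simulate_underpowered` and the settings in the usage lines are hypothetical and illustrative, not figures taken from Gelman and Weakliem. It reports how often a study clears the significance bar, how often a significant result has the wrong sign, and how much significant estimates overstate the true effect on average (sometimes called Type S and Type M errors).

```python
import random
import statistics


def simulate_underpowered(true_effect: float, std_error: float,
                          n_sims: int = 100_000, seed: int = 1) -> dict:
    """Simulate replications whose estimate ~ Normal(true_effect, std_error).

    Assumes a normal approximation and a two-sided 5% test (|z| > 1.96).
    """
    rng = random.Random(seed)
    z_crit = 1.96
    significant = []
    for _ in range(n_sims):
        estimate = rng.gauss(true_effect, std_error)
        if abs(estimate / std_error) > z_crit:
            significant.append(estimate)

    if not significant:
        return {"power": 0.0, "wrong_sign": float("nan"), "exaggeration": float("nan")}

    # Power: share of replications that come out "statistically significant".
    power = len(significant) / n_sims
    # Type S: share of significant estimates whose sign is opposite to the truth.
    wrong_sign = sum(1 for e in significant if e * true_effect < 0) / len(significant)
    # Type M: mean magnitude of significant estimates relative to the true effect.
    exaggeration = statistics.mean(abs(e) for e in significant) / abs(true_effect)
    return {"power": power, "wrong_sign": wrong_sign, "exaggeration": exaggeration}


if __name__ == "__main__":
    # Hypothetical settings: a tiny effect measured very noisily ...
    print(simulate_underpowered(true_effect=0.3, std_error=3.3))
    # ... versus the same effect measured precisely.
    print(simulate_underpowered(true_effect=0.3, std_error=0.1))
```

In the noisy setting only about 5% of replications reach significance, a sizeable fraction of those point in the wrong direction, and their average magnitude is many times the true effect. In the precise setting most replications reach significance and the exaggeration ratio stays close to 1.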

Statistical bias and flaws reflect strong incentives

Statistics are one of the standard types of evidence used by people in our society.

Activists trying to gain recognition for what they believe is a big problem will offer statistics that seem to prove that the problem is indeed a big one (and they may choose to downplay, ignore, or dispute any statistics that might make it seem smaller).

…experts… seem more important if their subject is a big, important problem.

The media favor disturbing statistics about big problems because big problems make more interesting, more compelling news…

Politicians use statistics to persuade us that they understand society’s problems and that they deserve our support.

Every statistic… is the product of choices—the choice between defining a category broadly or narrowly, the choice of one measurement over another, the choice of a sample. People choose definitions, measurements, and samples for all sorts of reasons: perhaps they want to emphasize some aspect of a problem; perhaps it is easier or cheaper to gather data in a particular way—many considerations can come into play.

Statistical bias and flaws can be checked

The issue is whether a particular statistic’s flaws are severe enough to damage its usefulness.

It would be nice to have a checklist… potential problems with definitions, measurements, sampling, mutation, and so on.

- Who produced the number, and what interests might they have?

- What might be the sources for this number? How could one go about producing the figure?

- What are the different ways key terms might have been defined, and which definitions have been chosen? Is the definition so broad that it encompasses too many false positives (or so narrow that it excludes too many false negatives)? How would changing the definition alter the statistic?

- How might the phenomena be measured, and which measurement choices have been made?

- What sort of sample was gathered, and how might that sample affect the result?

- And how is the statistic used? Is it being interpreted appropriately, or has its meaning been mangled to create a mutant statistic?

- Are comparisons being made, and if so, are the comparisons appropriate? Are there competing statistics? If so, what stakes do the opponents have in the issue, and how are those stakes likely to affect their use of statistics? And is it possible to figure out why the statistics seem to disagree, what the differences are in the ways the competing sides are using figures?
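
The question of how changing a definition alters a statistic can be illustrated with a toy example. This is a sketch built on made-up data: ten hypothetical cases, each with a screening score and a "true" status that real studies rarely know. The names `Case`, `evaluate_definition` and `CASES` are invented for illustration. Each candidate definition is a score threshold, and the function counts how many cases it captures along with its false positives and false negatives.

```python
from dataclasses import dataclass


@dataclass
class Case:
    score: int            # hypothetical screening score on a 0-10 scale
    truly_affected: bool  # hypothetical ground truth; rarely known in practice


def evaluate_definition(cases: list[Case], threshold: int) -> dict:
    """Count cases meeting 'score >= threshold' and tally misclassifications."""
    flagged = [c for c in cases if c.score >= threshold]
    false_positives = sum(1 for c in flagged if not c.truly_affected)
    false_negatives = sum(1 for c in cases if c.truly_affected and c.score < threshold)
    return {"count": len(flagged),
            "false_positives": false_positives,
            "false_negatives": false_negatives}


# Ten made-up cases, for illustration only.
CASES = [Case(2, False), Case(3, False), Case(4, False), Case(4, True),
         Case(5, False), Case(6, True), Case(7, False), Case(7, True),
         Case(8, True), Case(9, True)]

if __name__ == "__main__":
    for label, threshold in [("broad", 3), ("moderate", 6), ("narrow", 8)]:
        print(label, evaluate_definition(CASES, threshold))
```

Against the same ten cases, the broad definition counts 9 (4 of them false positives), the moderate one counts 5, and the narrow one counts 2 while missing 3 truly affected cases. The headline number depends as much on the definition as on the underlying situation.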

In practice… the Critical need not investigate the origin of every statistic. When confronted with an interesting number, they may try to learn more, to evaluate, to weigh the figure’s strengths and weaknesses.

Statistical bias and flaws turn up in every kind of evidence

…this Critical approach… ought to apply to all the evidence we encounter when we scan a news report, or listen to a speech, whenever we learn about social problems.

Claims about social problems often feature dramatic, compelling examples; the Critical might ask whether an example is likely to be a typical case or an extreme, exceptional instance.
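
One quick way to ask whether a dramatic example is typical is to locate it within the distribution it came from. This sketch assumes the full set of values is available or can be estimated; the data and the helper names `percentile_rank` and `describe_example` are hypothetical.

```python
import statistics


def percentile_rank(values: list[float], x: float) -> float:
    """Percentage of values that are less than or equal to x."""
    return 100 * sum(1 for v in values if v <= x) / len(values)


def describe_example(values: list[float], example: float) -> dict:
    """Compare a highlighted example with the centre of its distribution."""
    return {
        "median": statistics.median(values),
        "example": example,
        "percentile_rank": percentile_rank(values, example),
    }


if __name__ == "__main__":
    # Made-up, right-skewed data: most cases are small, one is extreme.
    data = [1, 1, 2, 2, 2, 3, 3, 4, 5, 40]
    print(describe_example(data, 40))
```

Here the showcased value of 40 sits at the 100th percentile while the median is 2.5, so it is the most extreme case in the set rather than a representative one.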

Claims about social problems often include quotations from different sources, and the Critical might wonder why those sources have spoken and why they have been quoted: Do they have particular expertise? Do they stand to benefit if they influence others?

Claims about social problems usually involve arguments about the problem’s causes and potential solutions. The Critical might ask whether these arguments are convincing. Are they logical? Does the proposed solution seem feasible and appropriate? And so on.

Being Critical—adopting a skeptical, analytical stance when confronted with claims—is an approach that goes far beyond simply dealing with statistics.[2]

1. Gelman, Andrew, and David Weakliem. “Of beauty, sex and power: Too little attention has been paid to the statistical challenges in estimating small effects.” *American Scientist* 97.4 (2009): 310-316.

2. Best, Joel. *Damned Lies and Statistics: Untangling Numbers from the Media, Politicians, and Activists*. Updated edition, University of California Press, 2012, Scribd pp. 32, 178-182.